
Experts from Georgia Tech estimate that only 30 percent of speech is visible on the lips, according to a report from News Atlas. In an earlier report, Oxford computer scientists stated that hearing-impaired lip-readers achieve, on average, 52.3 percent accuracy. Estimates of lip-reading accuracy vary, but one thing is certain: it is far from a reliable way of interpreting speech.

#What more can we expect from this lip reading technology?#

An AI system that gets about half the words right may not sound remarkable, but when the researchers gave the same clips to trained lip-readers, they got only 12% of words right, as reported by the BBC.

#Automated lip reading software#

"Watch, Attend and Spell", as the software has been named, can watch silent footage of people speaking and get about 50% of the words right.

#Oxford University has its own lip reading technology#

Experts at Oxford announced that they have created an artificial intelligence system that can lip-read better than humans. The model has been shown to be capable of interpreting silently mouthed words.

#Automated lip reading full#

For human lip-readers, context is essential when interpreting messages stripped of their audio cues. Frank Hubner is the man behind Automated Lip Reading (ALR). Professor Zisserman commented: 'This project really benefitted by being able to bring together the expertise from Oxford and DeepMind.'

The research team was made up of Joon Son Chung and Professor Andrew Zisserman at Oxford, where the research was carried out, together with Dr Andrew Senior and Dr Oriol Vinyals at DeepMind. There are also many other potential applications, such as dictating instructions to a phone in a noisy environment, dubbing archival silent films, resolving multi-talker simultaneous speech and improving the performance of automated speech recognition in general.

#Automated lip reading professional#

Commenting on the potential uses for WAS, Joon Son Chung, lead author of the study and a graduate student at Oxford's Department of Engineering, said: 'Lip-reading is an impressive and challenging skill, so WAS can hopefully offer support to this task; for example, suggesting hypotheses for professional lip readers to verify using their expertise.'

Speaking about the technology's value, Jesal Vishnuram, Technology Research Manager at Action on Hearing Loss, said: 'Action on Hearing Loss welcomes the development of new technology that helps people who are deaf or have a hearing loss to have better access to television through superior real-time subtitling. It is great to see research being conducted in this area, with new breakthroughs welcomed by Action on Hearing Loss by improving accessibility for people with a hearing loss. AI lip-reading technology would be able to enhance the accuracy and speed of speech-to-text, especially in noisy environments, and we encourage further research in this area and look forward to seeing new advances being made.'

The software could support a number of developments, including helping the hard of hearing to navigate the world around them. The machine's mistakes were small, such as missing an "s" at the end of a word or misspelling a single letter.

The researchers found that the software was more accurate than the professional: the human lip-reader correctly read 12 per cent of words, while the WAS software recognised 50 per cent of the words in the dataset without error.

The research team compared how well the machine and a human expert could work out what was being said in silent video, focusing solely on each speaker's lip movements. The videos contained more than 118,000 sentences in total, with a vocabulary of 17,500 words.
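The article does not say exactly how the 12% and 50% figures were scored. A common way to score lip-reading or speech-recognition output is word error rate (WER): the Levenshtein edit distance between the reference and predicted word sequences, divided by the number of reference words. The sketch below illustrates that metric under this assumption; it is not the Oxford/DeepMind evaluation code, and the function names and example sentences are hypothetical. Running the same distance over characters (character error rate) shows why a dropped "s" counts as a small mistake.

```python
# Hypothetical scoring helpers: a sketch of word and character error rate,
# NOT the evaluation code used for WAS.


def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences.

    Substitutions, insertions and deletions each cost 1. Works on lists of
    words or on plain strings (sequences of characters).
    """
    # prev[j] = distance between the first i-1 items of ref and first j of hyp
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # delete ref[i-1]
                curr[j - 1] + 1,     # insert hyp[j-1]
                prev[j - 1] + cost,  # substitute (or match when cost == 0)
            )
        prev = curr
    return prev[-1]


def word_error_rate(reference, hypothesis):
    """Word-level edits needed, divided by the number of reference words."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def char_error_rate(reference, hypothesis):
    """Character-level edits needed, divided by the reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    # Hypothetical example: the prediction drops a final "s", the kind of
    # small mistake the article describes.
    ref = "the prime minister visits schools"
    hyp = "the prime minister visit schools"
    print(word_error_rate(ref, hyp))            # → 0.2 (1 wrong word out of 5)
    print(round(char_error_rate(ref, hyp), 3))  # → 0.03 (1 edit over 33 characters)
```

Word-level scoring treats "visit" versus "visits" as a whole wrong word, while the character-level score shows it is a one-letter slip, so any reported percentage depends on which metric was used.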

#Automated lip reading how to#

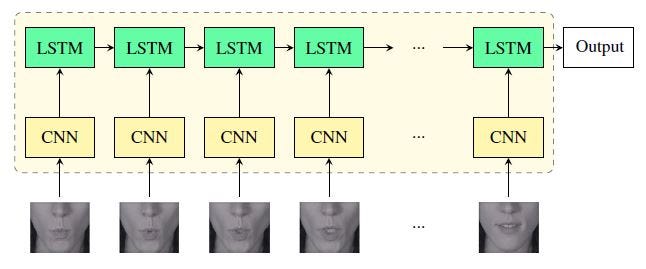

Watch, Attend and Spell (WAS) is a new artificial intelligence (AI) software system developed by Oxford in collaboration with DeepMind. The system uses computer vision and machine learning methods to learn how to lip-read from a dataset of more than 5,000 hours of TV footage, gathered from six different programmes including Newsnight, BBC Breakfast and Question Time.
